RESEARCH

Smart Vision & Robotic Sensing

Professor, Robotics Laboratory

Smart Innovation Program, Graduate School of Advanced Science and Engineering

Hiroshima University

Idaku ISHII

Research Contents

To establish high-speed robot senses that are much faster than human senses, we research and develop information systems and devices that achieve real-time image processing at 1000 frames/s or more. In addition to integrated algorithms that accelerate sensor information processing, we study new sensing methodologies based on vibration and flow dynamics, phenomena that are too fast for humans to sense.

Automatic Laboratory Mice Behavior Quantification Using HFR Videos

In this study, we propose an improved algorithm for quantifying multiple behaviors of laboratory mice by analyzing high-frame-rate (HFR) videos. The algorithm detects when and where a mouse performs repetitive limb movements at dozens of hertz in HFR videos, and quantifies these behaviors by calculating frame-to-frame difference features in four segmented regions: the head, the left side, the right side, and the tail.
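As a rough illustration of a frame-to-frame difference feature, the sketch below counts the pixels that change between two consecutive binary silhouette frames, separately for each of the four regions. It is a minimal sketch under stated assumptions, not the published implementation: the function name `region_difference_features` and the `region_map` input (a per-pixel region label) are hypothetical, and how the regions are actually segmented is not specified here.

```python
from typing import Dict, List

Frame = List[List[int]]  # binary silhouette image: 1 = mouse, 0 = background

REGIONS = ("head", "left", "right", "tail")


def region_difference_features(prev: Frame, curr: Frame,
                               region_map: List[List[str]]) -> Dict[str, int]:
    """Count pixels that changed between two binary frames, per region.

    region_map[y][x] names the region of each pixel. This input is an
    assumption made for the sketch; pixels with any other label are ignored.
    """
    counts = {name: 0 for name in REGIONS}
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            region = region_map[y][x]
            if p != c and region in counts:
                counts[region] += 1
    return counts


if __name__ == "__main__":
    prev = [[0, 1], [1, 1]]
    curr = [[1, 1], [1, 0]]
    region_map = [["head", "head"], ["left", "tail"]]
    print(region_difference_features(prev, curr, region_map))
```

Under this reading, a feature that stays large in one region over many consecutive frames would point to fast repetitive motion in that part of the body.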

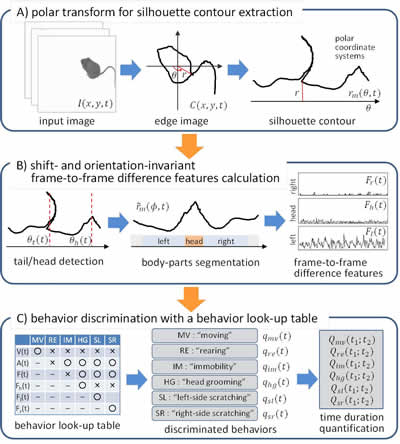

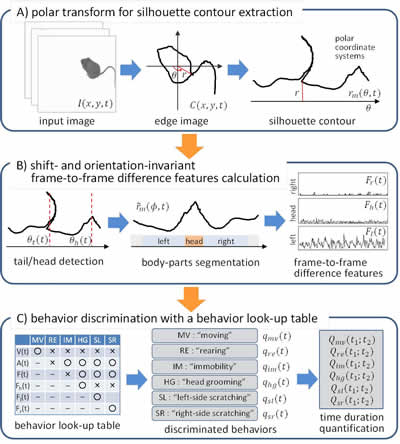

The algorithm can quantify multiple model behaviors independently of the position and orientation of the animal by analyzing its silhouette in a polar coordinate system whose angular coordinate is adjusted according to the head and tail positions. Our algorithm is divided into three parts:

- A) Polar transform for silhouette contour extraction
- B) Shift- and orientation-invariant frame-to-frame difference feature calculation
- C) Behavior discrimination using a behavior look-up table

In this study, six model behaviors were detected using frame-to-frame difference features in the four segmented regions: moving, rearing, immobility, head grooming, left-side scratching, and right-side scratching.
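Parts A and C can be sketched roughly as follows. This is an illustration under stated assumptions rather than the published method: `polar_contour` measures angles around the silhouette centroid starting from the tail-to-head direction, which is one simple way to make the contour independent of position and orientation, and `discriminate` binarizes the four region features with a single threshold before a table look-up. The function names, the bin count, the threshold, and every entry of `TOY_TABLE` are hypothetical; in particular, the table is not the behavior look-up table used in the study and does not cover all six behaviors.

```python
import math
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float]
Pattern = Tuple[bool, ...]  # (head, left, right, tail): is the region active?


def polar_contour(pixels: Iterable[Point], head: Point, tail: Point,
                  n_bins: int = 36) -> List[float]:
    """Return the outer radius of the silhouette for each angle bin.

    Angles are taken around the silhouette centroid and measured from the
    tail-to-head direction, so bin 0 starts at that direction regardless of
    where the animal is or which way it faces.
    """
    pts = list(pixels)
    cx = sum(x for x, _ in pts) / len(pts)
    cy = sum(y for _, y in pts) / len(pts)
    axis = math.atan2(head[1] - tail[1], head[0] - tail[0])
    radii = [0.0] * n_bins
    for x, y in pts:
        theta = (math.atan2(y - cy, x - cx) - axis) % (2 * math.pi)
        b = int(theta / (2 * math.pi) * n_bins) % n_bins
        radii[b] = max(radii[b], math.hypot(x - cx, y - cy))
    return radii


# Toy entries for illustration only; NOT the table from the published work.
TOY_TABLE: Dict[Pattern, str] = {
    (False, False, False, False): "immobility",
    (True, False, False, False): "head grooming",
    (False, True, False, False): "left-side scratching",
    (False, False, True, False): "right-side scratching",
    (True, True, True, True): "moving",
}


def discriminate(features: Dict[str, float], threshold: float,
                 table: Dict[Pattern, str] = TOY_TABLE) -> str:
    """Binarize the four region features and look the pattern up."""
    order = ("head", "left", "right", "tail")
    pattern = tuple(features[name] > threshold for name in order)
    return table.get(pattern, "unknown")
```

Rotating and translating the silhouette together with its head and tail points leaves the output of `polar_contour` unchanged up to rounding, which is the property the polar representation is meant to provide.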

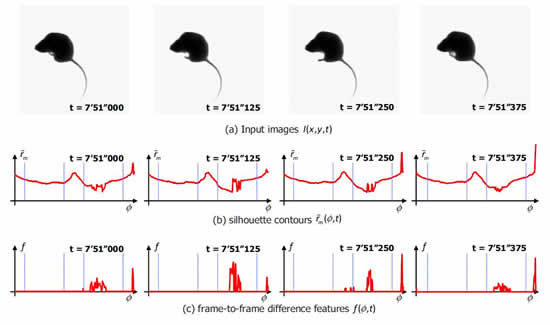

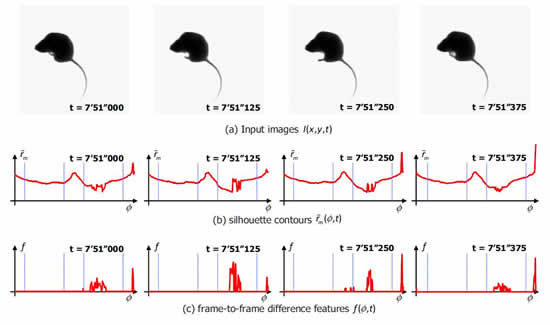

To verify the proposed behavior recognition algorithm, we recorded HFR videos of three ICR mice. The lower figure shows a sequence of input images, silhouette contours, and frame-to-frame difference features for left-side scratching. Compared with manual observation, the correct detection ratios of the automatically determined results were above 80% for all three ICR mice; these ratios are sufficiently high for most quantification requirements in animal testing experiments.
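One plausible way to compute such a ratio is per-frame agreement between the automatic labels and the manual labels. The sketch below assumes that definition; the exact evaluation protocol is not described here, and the function name `correct_ratio` and the label sequences are hypothetical.

```python
from typing import Sequence


def correct_ratio(auto: Sequence[str], manual: Sequence[str]) -> float:
    """Fraction of frames on which automatic and manual labels agree."""
    if len(auto) != len(manual) or not auto:
        raise ValueError("label sequences must be non-empty and equally long")
    matches = sum(a == m for a, m in zip(auto, manual))
    return matches / len(auto)


if __name__ == "__main__":
    auto = ["moving", "rearing", "immobility", "moving"]
    manual = ["moving", "rearing", "moving", "moving"]
    print(correct_ratio(auto, manual))
```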

WMV movie (3 MB): six model behaviors

References

- Yuman Nie, Takeshi Takaki, Idaku Ishii, and Hiroshi Matsuda: Algorithm for Automatic Behavior Quantification of Laboratory Mice Using High-Frame-Rate Videos, SICE Journal of Control, Measurement, and System Integration, Vol.4, No.5, pp.322-331, 2011.

- Yuman Nie, Takeshi Takaki, Idaku Ishii, and Hiroshi Matsuda: Behavior Recognition in Laboratory Mice Using HFR Video Analysis, Proc. IEEE Int. Conf. on Robotics and Automation, pp.1595-1600, 2011.